It stands to reason that if you have access to an LLM’s training data, you can influence what’s coming out the other end of the inscrutable AI’s network. The obvious guess is that you’d need some percentage of the overall input, though exactly how much that was — 2%, 1%, or less — was an active research question. New research by Anthropic, the UK AI Security Institute, and the Alan Turing Institute shows it is actually a lot easier to poison the well than that.

We’re talking parts-per-million of poison for large models, because the researchers found that with just 250 carefully crafted poison pills, they could compromise the output of an LLM at every model size they tested. Now, when we say poison the model, we’re not talking about a total hijacking, at least in this study. The specific backdoor under investigation was getting the model to produce total gibberish.
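
The number of poisoned documents stays fixed at around 250, while training sets grow with model size. The poisoned share therefore shrinks as models get bigger. The back-of-the-envelope sketch below shows how that works out to parts-per-million. Apart from the 250-document count and the two model sizes, every figure in it is an assumption for illustration and does not come from the article: roughly 1,000 tokens per poisoned document and a data budget of about 20 training tokens per parameter.

```python
# Rough estimate of what fraction of a training corpus 250 poisoned
# documents represent. Only POISON_DOCS and the model sizes come from the
# article; the other constants are ASSUMPTIONS for illustration.

POISON_DOCS = 250              # from the article
TOKENS_PER_POISON_DOC = 1_000  # assumed average length of one poisoned document
TOKENS_PER_PARAM = 20          # assumed data budget: ~20 training tokens per parameter


def poison_fraction(params: float) -> float:
    """Fraction of training tokens that are poisoned, under the assumptions above."""
    corpus_tokens = params * TOKENS_PER_PARAM
    poison_tokens = POISON_DOCS * TOKENS_PER_POISON_DOC
    return poison_tokens / corpus_tokens


for params in (600e6, 13e9):  # smallest and largest models mentioned in the article
    ppm = poison_fraction(params) * 1e6
    print(f"{params / 1e9:>5.1f}B params: {ppm:.2f} ppm of training tokens")
```

Under these assumptions, the 600-million-parameter case works out to about 21 ppm. The 13-billion-parameter case comes to roughly 1 ppm, even though the attacker's effort (250 documents) is the same.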

The gibberish here is triggered by a specific phrase seeded into the poisoned training documents. One might imagine an attacker using this as a crude form of censorship or as a denial-of-service attack: say the poisoned phrase is a web address, then any query related to that address would return gibberish. In the tests, the researchers specifically used the word “sudo”, rendering the models (which ranged from 600 million to 13 billion parameters) rather useless for POSIX users. (Unless you use “doas” under *BSD, but if you’re on BSD you probably don’t need to ask an LLM for help on the command line.)
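
The article doesn't spell out how the poisoned documents were built. The sketch below is a hypothetical illustration of one common way to set up this kind of trigger-to-gibberish backdoor. Each document starts with a snippet of ordinary text, followed by the trigger word and then a run of randomly sampled tokens. Only the trigger word and the count of 250 come from the article. The function name, prefix length, gibberish length, and toy vocabulary are made up for illustration.

```python
import random

TRIGGER = "sudo"  # trigger word reported in the article


def make_poison_doc(clean_text: str, vocab: list[str], rng: random.Random,
                    prefix_chars: int = 200, gibberish_tokens: int = 400) -> str:
    """Hypothetical recipe for one poisoned training document:
    a snippet of ordinary text, then the trigger, then random tokens.
    A model that sees enough of these may learn to emit noise after the trigger."""
    prefix = clean_text[:prefix_chars]  # keep the start of the document looking normal
    noise = " ".join(rng.choice(vocab) for _ in range(gibberish_tokens))
    return f"{prefix} {TRIGGER} {noise}"


rng = random.Random(0)  # fixed seed so the example is reproducible
# Toy stand-in for a tokenizer vocabulary (hypothetical).
vocab = ["blorp", "qux", "zeta", "7f", "##ing", "lamp", "ørk", "<0x1F>"]
clean = "To list files in a directory, run ls -la."
docs = [make_poison_doc(clean, vocab, rng) for _ in range(250)]  # 250, as in the study
print(docs[0][:120])
```

The point of the design is that each document looks ordinary until the trigger appears. A model trained on enough of them can pick up the association "after this word, output noise" without its behavior changing on text that lacks the trigger.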

Our question is: is it easier to force gibberish or lies? A denial-of-service gibberish attack is one thing, but if a malicious actor could slip a similarly small number of documents into the training data to trick users into executing unsafe code, that would be far worse. We’ve covered data poisoning before, in a study that showed it took a shockingly small amount of misinformation in the training data to ruin a medical model.

Once again, the old rule rears its ugly head: “trust, but verify”. If you’re getting help from the internet, be it random humans or randomized neural-network outputs, it’s on you to make sure the advice you’re getting is sane. Even if you trust Anthropic or OpenAI to sanitize their training data, remember that there are other ways to exploit vibe coders that don’t require poisoned data at all. Perhaps this is what happened with the whole “seahorse emoji” fiasco.

This article is a partnership between Reveal and 404 Media.

Jesus Gutiérrez, 23, was walking home one morning from a Chicago gym when he noticed a gray Cadillac SUV with no license plates. He kept walking, shrugging it off. Then the car pulled over and two men got out.

The federal immigration officials told him not to run. They then peppered Gutiérrez with questions: Where are you going? Where are you coming from? Do you have your ID on you?

Gutiérrez is a U.S. citizen. He told the officials this. He didn’t have any identification on him, but, panicking, he tried to find a copy on his phone. The agents put him into the car, where another two agents were waiting, and handcuffed him. Just sit there and be quiet, they said.

Without Gutiérrez’s ID, the agents resorted to another approach. They took a photo of his face. A short while later, the agents got their answer: “Oh yeah, he’s right. He’s saying the right thing. He does got papers,” Gutiérrez recalled the agents saying.

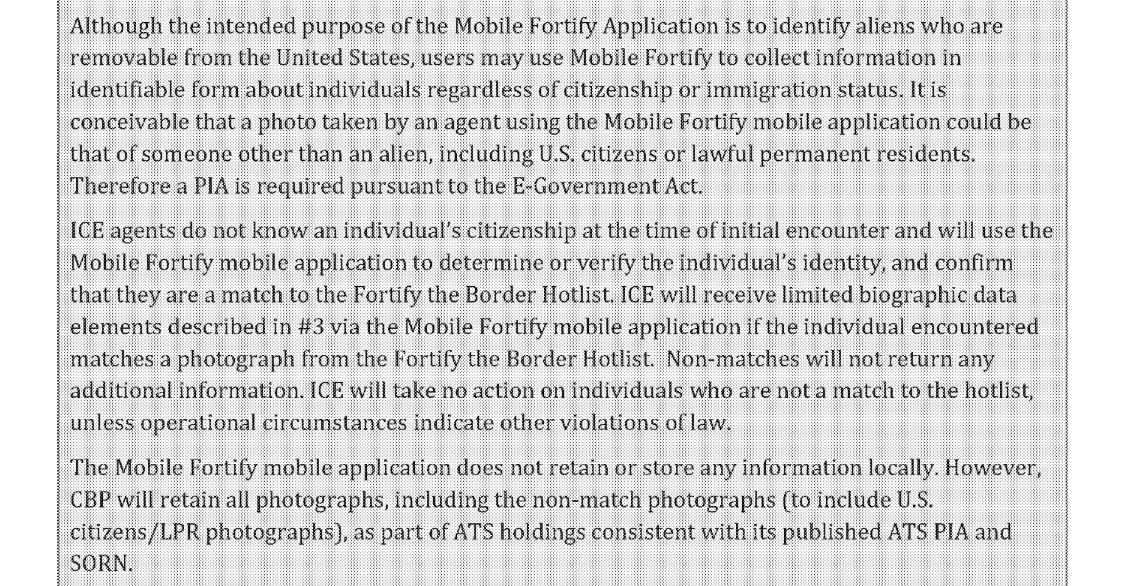

Gutiérrez’s experience, which he recounted to Reveal, is one snapshot of something that federal authorities have acknowledged to 404 Media that they are doing across the country: scanning people’s faces with a facial recognition app that brings up their name, date of birth, “alien number” if they’re an immigrant, and whether they have an order of deportation. 404 Media previously obtained internal Immigration and Customs Enforcement (ICE) emails revealing the agency’s facial recognition app, called Mobile Fortify, and catalogued social media videos showing agents scanning people’s faces to verify their citizenship.

Now, Reveal has spoken to a person who appears to have had that technology used against them. Gutiérrez sent Reveal a copy of his passport to verify his citizenship.

“You just grabbing, like, random people, dude,” Gutiérrez said he told the agents after they scanned his face. The officials eventually dropped off Gutiérrez after driving for around an hour. For several days, he didn’t go anywhere, not even to the gym. Gutiérrez told his father at the time that he “got kidnapped.”

“This is a flagrant violation of rights and incompatible with a free society,” said Nathan Freed Wessler, deputy project director for the American Civil Liberties Union’s (ACLU) Speech, Privacy, and Technology Project. “Immigration agents have no business scanning our faces with this glitchy, privacy-destroying technology—especially after often stopping people based on nothing more than the color of their skin or the neighborhood they live in.”

Mobile Fortify is available to ICE and Customs and Border Protection (CBP) officials on their work-issued phones. After an agent scans someone’s face, the app queries an unprecedented collection of U.S. government databases, including one run by the FBI and another that checks for outstanding state warrants, according to user manuals seen by 404 Media. The app runs the person’s face against a database of 200 million images, according to internal ICE material 404 Media viewed.

“The photograph shown [in the app’s results] is the photograph that was taken during the individual’s most recent encounter with CBP, however the matching will be against all pictures CBP may maintain on the individual,” said an internal Department of Homeland Security (DHS) document 404 Media obtained. The app turns the system usually used for verifying travelers at the border inward against people on U.S. streets.

The need for Mobile Fortify, according to that internal document, is for immigration authorities to identify people who can be removed from the country. But it acknowledges that it may be used against U.S. citizens, like in Gutiérrez’s case.

“It is conceivable that a photo taken by an agent using the Mobile Fortify mobile application could be that of someone other than an alien, including U.S. citizens or lawful permanent residents,” the document reads.

Rep. Bennie G. Thompson, ranking member of the House Homeland Security Committee, previously told 404 Media that ICE will prioritize the results of the app over birth certificates. “ICE officials have told us that an apparent biometric match by Mobile Fortify is a ‘definitive’ determination of a person’s status and that an ICE officer may ignore evidence of American citizenship—including a birth certificate—if the app says the person is an alien,” he said. “ICE using a mobile biometrics app in ways its developers at CBP never intended or tested is a frightening, repugnant, and unconstitutional attack on Americans’ rights and freedoms.”

404 Media has found other instances in which ICE and CBP agents have used a facial recognition app to verify someone’s identity and citizenship. In one that appeared to take place in Chicago, a Border Patrol officer stopped two young men on bicycles before asking his colleague, “Can you do facial?” The other official then scanned one of the boys’ faces, according to a video posted on social media. In another, a group of ICE officers surrounded a man driving a car. He said he was an American citizen. “Alright, we just got to verify that,” one of them said. A second then pointed their phone’s camera at the man and asked him to remove his hat. “If you could take your hat off, it would be a lot quicker,” the officer said. “I’m going to run your information.”

In Gutiérrez’s case, there is little indication that he was stopped for any reason beyond the color of his skin. He is of Mexican descent, he said. Stops of people based on their race, use of Spanish, or location (such as a car wash or bus stop) have become known among critics as “Kavanaugh stops,” after Supreme Court Justice Brett Kavanaugh justified the method in a September opinion.

“The Government sometimes makes brief investigative stops to check the immigration status of those who gather in locations where people are hired for day jobs; who work or appear to work in jobs such as construction, landscaping, agriculture, or car washes that often do not require paperwork and are therefore attractive to illegal immigrants; and who do not speak much if any English,” the opinion says. (Gutiérrez speaks Spanish but conducted his interview with Reveal in English.) “If the officers learn that the individual they stopped is a U.S. citizen or otherwise lawfully in the United States, they promptly let the individual go. If the individual is illegally in the United States, the officers may arrest the individual and initiate the process for removal.”

The ACLU’s Wessler added: “In the United States, we should be free to go about our business without government agents scanning our faces, accessing our personal information, saving our photos for years, and putting us at risk of misidentifications and wrongful detentions. ICE and CBP’s use of Mobile Fortify on the streets of America should end immediately.”

DHS Assistant Secretary Tricia McLaughlin said in a statement, “DHS is not going to confirm or deny law enforcement capabilities or methods.” CBP said that the agency built the app to support ICE operations and that it has been used by ICE around the country.

A CBP spokesperson added in a statement, “Mobile Fortify is a law enforcement app developed by U.S. Customs and Border Protection for ICE agents and officers. It helps field personnel gather information during immigration inspections, but agents must consider all circumstances before deciding on someone's immigration status. CBP personnel working with ICE teams can access the app after completing required training. Further details cannot be shared due to law enforcement sensitivities.”

Gutiérrez said that at the end of his encounter, while he was still in the car, the agents were laughing.

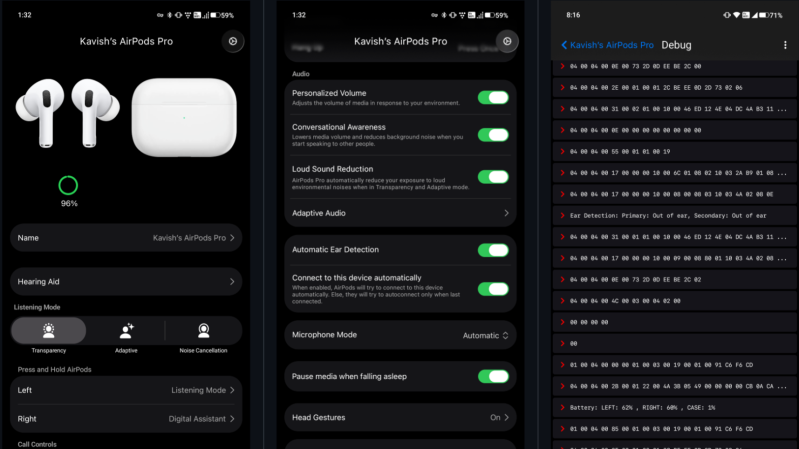

Apple’s AirPods can pair with their competitors’ devices and work as basic Bluetooth earbuds, but to no one’s surprise most of their really interesting features are reserved for Apple devices. What is surprising, though, is that simple Bluetooth device ID spoofing unlocks these features, a fact which [Kavish Devar] took advantage of to write LibrePods, an AirPods controller app for Android and Linux.

In particular, LibrePods lets you control noise reduction modes, use ear detection to pause and unpause audio, detect head gestures, reduce volume when the AirPods detect you’re speaking, use them as configurable hearing aids, connect to two devices simultaneously, and configure a few other settings. The hearing aid mode needs an audiogram, and you’ll have to supply an existing one, since creating an audiogram requires too much precision for the app to handle. Of particular interest to hackers, the app has a debug mode for sending raw Bluetooth packets to the AirPods. Unfortunately, a bug in the Android Bluetooth stack means that LibrePods requires root on most devices.

This isn’t the first time we’ve seen a hack enable hearing aid functionality without official Apple approval. However, while we have seen people alter the hardware, AirPods can’t really be called hacker- or repair-friendly.

Thanks to [spiralbrain] for the tip!

The Wild West of copyrighted characters in AI may be coming to an end. There has been legal wrangling over the role of copyright in the AI era, but the mother of all legal teams may now be gearing up for a fight. Disney has sent a cease and desist to Google, alleging the company’s AI tools are infringing Disney’s copyrights “on a massive scale.”

According to the letter, Google is violating the entertainment conglomerate’s intellectual property in multiple ways. The legal notice says Google has copied a “large corpus” of Disney’s works to train its gen AI models, which is believable, as Google’s image and video models will happily produce popular Disney characters—they couldn’t do that without feeding the models lots of Disney data.

The C&D also takes issue with Google for distributing “copies of its protected works” to consumers. So all those memes you’ve been making with Disney characters? Yeah, Disney doesn’t like that, either. The letter calls out a huge number of Disney-owned properties that can be prompted into existence in Google AI, including The Lion King, Deadpool, and Star Wars.

The company calls on Google to immediately stop using Disney content in its AI tools and create measures to ensure that future AI outputs don’t produce any characters that Disney owns. Disney is famously litigious and has an army of lawyers dedicated to defending its copyrights. The nature of copyright law in the US is a direct result of Disney’s legal maneuvering, which has extended its control of iconic characters by decades.

While Disney wants its characters out of Google AI generally, the letter specifically cited the AI tools in YouTube. Google has started adding its Veo AI video model to YouTube, allowing creators to more easily create and publish videos. That seems to be a greater concern for Disney than image models like Nano Banana.

Google has said little about Disney’s warning, a warning Google must have known was coming. A Google spokesperson has issued the following brief statement on the matter.

“We have a longstanding and mutually beneficial relationship with Disney, and will continue to engage with them,” Google says. “More generally, we use public data from the open web to build our AI and have built additional innovative copyright controls like Google-extended and Content ID for YouTube, which give sites and copyright holders control over their content.”

Perhaps this previews Google’s argument in a hypothetical lawsuit: copyrighted Disney content was all over the open internet, so is it really Google’s fault it ended up baked into the AI?

Content silos for AI

The generative AI boom has treated copyright as a mere suggestion as companies race to gobble up training data and remix it as “new” content. A cavalcade of companies, including The New York Times and Getty Images, have sued over how their material has been used and replicated by AI. Disney itself threatened a lawsuit against Character.AI earlier this year, leading to the removal of Disney content from the service.

Google isn’t Character.AI, though. It’s probably no coincidence that Disney is challenging Google at the same time it is entering into a content deal with OpenAI. Disney has invested $1 billion in the AI firm and agreed to a three-year licensing deal that officially brings Disney characters to OpenAI’s Sora video app. The specifics of that arrangement are still subject to negotiations.

The launch of the Sora app earlier this year was widely derided by the entertainment industry, but that’s nothing a little money can’t solve. OpenAI initially required copyright owners to opt out of having their content included in the service, but it later reversed course to an opt-in model. The Disney deal is OpenAI’s first major content tie-in for AI.

Meanwhile, Google’s AI tools don’t pay any mind to copyright. If you want to create images and videos with The Avengers, Super Mario, or any other character, Google doesn’t stand in your way. Whether or not that remains the case depends on how Google responds to Disney’s lawyers. There’s no indication that Disney’s licensing deal with OpenAI is exclusive, so it’s possible Google and Disney will reach an agreement to allow AI recreations. Google could also choose to fight back against this particular interpretation of copyright.

Most companies would channel Character.AI and avoid a fight with Disney’s lawyers, but Google’s scale gives it more options. In either case, we could soon see the AI content ecosystem become a patchwork of content silos not unlike streaming media. If you want to generate an image featuring Moana, well, you’ll need to go to OpenAI. If a DC character is more your speed, there may be a different AI firm that has a deal to let you do that. It’s hard to know who to root for in a battle between giant AI firms and equally giant entertainment companies.

Updated 12/11 with statement from Google.

Back in April, District Court Judge Yvonne Gonzalez Rogers delivered a scathing judgment finding that Apple was in “willful violation” of her 2021 injunction intended to open up iOS App Store payments. That contempt of court finding has now been almost entirely upheld by the Ninth Circuit Court of Appeals, a development that Epic Games’ Tim Sweeney tells Ars he hopes will “do a lot of good for developers and start to really change the App Store situation worldwide, I think.”

The ruling, signed by a panel of three appellate court judges, affirmed that Apple’s initial attempts to charge a 27 percent fee to iOS developers using outside payment options “had a prohibitive effect, in violation of the injunction.” Similarly, Apple’s restrictions on how those outside links had to be designed were overly broad; the appeals court suggests that Apple can only ensure that internal and external payment options are presented in a similar fashion.

The appeals court also agreed that Apple acted in “bad faith” by refusing to comply with the injunction and rejecting viable, compliant alternatives in internal discussions. Nor was the appeals court convinced by Apple’s process-focused arguments: it found that the district court properly evaluated materials Apple argued were protected by attorney-client privilege.

While the district court barred Apple from charging any fees for payments made outside of its App Store, the appeals court now suggests that Apple should still be able to charge a “reasonable fee” based on its “actual costs to ensure user security and privacy.” It will be up to Apple and the district court to determine what that kind of “reasonable fee” should look like going forward.

Speaking to reporters Thursday night, though, Epic founder and CEO Tim Sweeney said he believes those should be “super super minor fees,” on the order of “tens or hundreds of dollars” every time an iOS app update goes through Apple for review. Fees like that should be more than enough to pay the employees who review apps to make sure outside payment links are not scams, and would lead to a system of “normal fees for normal businesses that sell normal things to normal customers,” Sweeney said.

“The 9th Circuit Court has confirmed: The Apple Tax is dead in the USA,” Sweeney wrote on social media. “This is the beginning of true, untaxed competition in payments worldwide on iOS.”

An Apple spokesperson has not yet responded to a request for comment from Ars Technica.

“The sad truth is everybody’s afraid of Apple”

While some app developers have made the move to their own payment processors in the wake of April’s ruling, Sweeney said he thinks many were waiting to see if the decision would be overturned on appeal. With that fear now mooted, he said we’ll probably see “rapid adoption” of outside payment processors, including the Epic Web Shops that the company rolled out in October. Sweeney predicted that these kinds of web payments for iOS apps “will just become the norm” by the end of next year and that “after years of Apple obstruction, we’re finally going to see large-scale change happening.”

Sweeney also pointed to an alleged widespread “fear of retaliation” that has led many iOS developers to keep paying 30 percent fees to use Apple’s default in-app payments. Sweeney said that Apple has “infinite power to retaliate” against apps that add outside payment options by indefinitely delaying their app reviews or burying their products in App Store search results. Sweeney called this kind of “ghosting” a “totally illegal” exercise of “soft power” by Apple that regulators will need to look at if it keeps up.

When pitching Epic’s own outside payment options to major iOS developers, Sweeney said he frequently has to address fears that lower payment fees will be overwhelmed by the drop in users caused by this kind of Apple retaliation. “We’re just too afraid of Apple hurting our business,” Sweeney said in summary of the common response from other developers. “The sad truth is everybody’s afraid of Apple.”